Classic concurrency in iOS

In 2000, Apple released Darwin, an open-source Unix-like operating system that served as the foundation for the first version of Mac OS X, 10.0, the following year. That system, in turn, became the ancestor of all Apple operating systems, including modern macOS and iOS, and later watchOS for watches and audioOS for smart speakers.

Darwin is built on XNU, a hybrid kernel that combines the Mach microkernel with components from the BSD family of operating systems. For the purposes of this note, the important point is that Darwin inherited the Unix process model and the POSIX thread model from BSD, while representing processes as Mach tasks.

For working with threads, developers have access to the C library pthread, which is the first layer of abstraction over the system’s threads. Although you can still use pthread in your code today, Apple has never recommended using it directly. Since the early versions of Mac OS X, developers have had an Apple abstraction layer over pthread called NSThread.

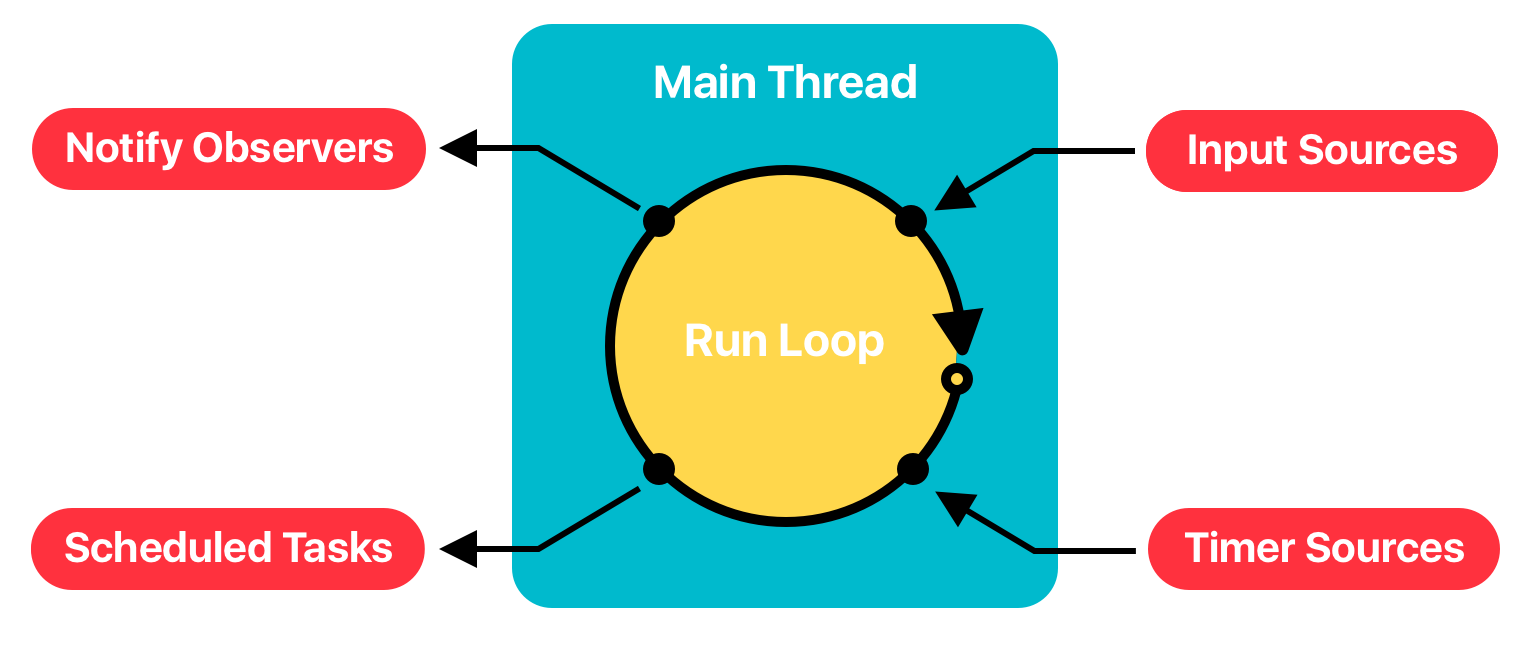

NSThread is the second layer of abstraction thoughtfully provided by Apple. Besides the more familiar Objective-C syntax for creating and managing threads, the company introduced RunLoop – a task and event servicing loop. RunLoop uses Mach microkernel tools (ports, XPC, events, and tasks) to manage POSIX threads, and it can put a thread to sleep when it has nothing to do and wake it up when there is work to be done.

Of course, you can still use NSThread today, but remember that creating a thread is expensive because it must be requested from the OS itself. Thread synchronization and access to shared resources are also inconvenient in everyday development. That is why Apple set out to make working with asynchronous code more convenient.
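To make this concrete, here is a minimal sketch of doing a piece of work on a manually created thread with `Thread` (the Swift name of NSThread). The `sumOfSquares` function and the thread name are hypothetical and exist only for illustration.

```swift
import Foundation
import Dispatch

// Hypothetical workload, used only for illustration.
func sumOfSquares(upTo n: Int) -> Int {
    return (1...n).reduce(0) { $0 + $1 * $1 }
}

var threadResult = 0
let finished = DispatchSemaphore(value: 0)

// Thread(block:) asks the OS for a brand-new thread; the block runs once start() is called.
let worker = Thread {
    threadResult = sumOfSquares(upTo: 10)
    finished.signal() // tell the waiting thread that the work is done
}
worker.name = "com.example.worker" // hypothetical name, handy when debugging
worker.start()

finished.wait() // block the current thread until the worker signals
print(threadResult) // 385
```

Note that even this tiny example needs manual signaling to learn when the work is done, which is exactly the kind of inconvenience described above.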

Grand Central Dispatch (GCD)

With the release of iOS 4, Apple gave developers another level of abstraction by introducing Grand Central Dispatch (GCD), which organizes asynchronous code around queues and tasks. GCD is a high-level API that allows you to create custom queues, manage tasks within them, handle synchronization issues, and do so as efficiently as possible.

Since queues are just an abstraction layer, underneath, they still utilize the same system threads, but the mechanism of their creation and usage is optimized. GCD has a pool of pre-created threads and efficiently distributes tasks, maximizing processor utilization when necessary. Developers no longer need to worry about the threads themselves, their creation, or management.

In addition to creating a new queue manually, GCD provides access to the main queue, where UI work is performed, and access to several system (global) queues.

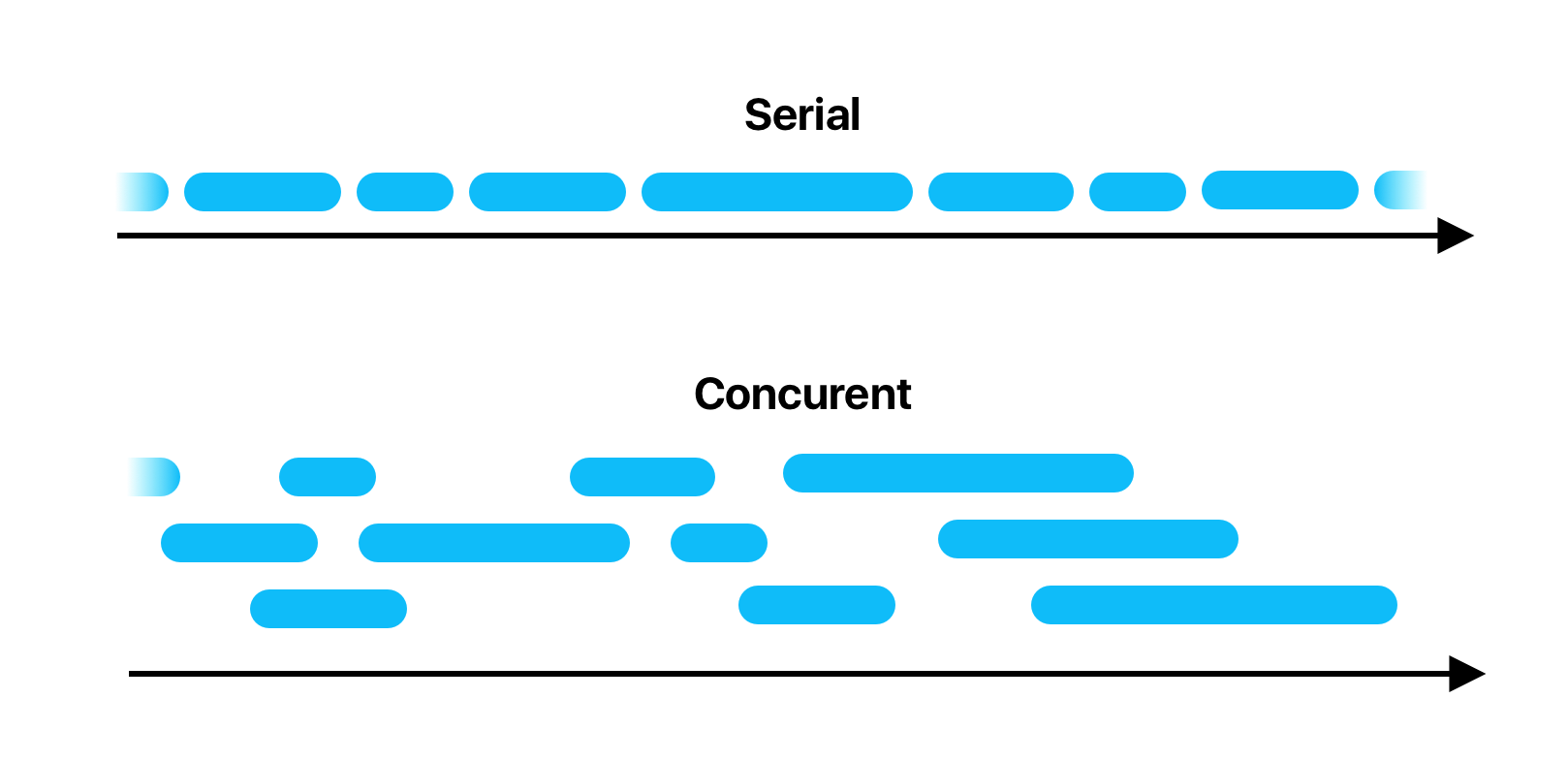

GCD queues come in two types:

- Serial queues – tasks are executed sequentially, one after another.

- Concurrent queues – tasks are started in the order they were added, but they can run simultaneously.
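The ordering guarantee of a serial queue can be sketched as follows. The queue label is a hypothetical placeholder.

```swift
import Dispatch

// Hypothetical label; any reverse-DNS string works.
let serialQueue = DispatchQueue(label: "com.example.serial")
var order: [Int] = []

for i in 0..<5 {
    // Each task starts only after the previous one has finished,
    // so appending to the array needs no extra locking.
    serialQueue.async { order.append(i) }
}

// An empty sync task returns only after everything enqueued before it has run.
serialQueue.sync { }
print(order) // [0, 1, 2, 3, 4]
```

On a concurrent queue the same tasks could overlap, so an unsynchronized `append` like this one would no longer be safe.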

By default, a queue is created as serial; to create a concurrent queue, you need to specify it explicitly:

    let queue = DispatchQueue(label: "com.company.name.app", attributes: .concurrent)

As mentioned earlier, GCD provides pre-created global queues with different priorities:

- global(qos: .userInteractive) – For tasks that interact with the user at the moment and take very little time.

- global(qos: .userInitiated) – For tasks initiated by the user that require feedback.

- global(qos: .utility) – For tasks that require some time to execute and don’t need immediate feedback.

- global(qos: .background) – For tasks unrelated to visualization and not time-critical.

⚠️ All global queues are queues with concurrent task execution.
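As a rough sketch, this is how work might be sent to one of the global queues. The `checksum` function is a hypothetical stand-in for real work, and the `DispatchGroup` is there only so the example can wait for the result.

```swift
import Dispatch

// Hypothetical stand-in for real work such as decoding or parsing.
func checksum(of bytes: [UInt8]) -> Int {
    return bytes.reduce(0) { $0 + Int($1) }
}

let group = DispatchGroup()
var result = 0

// .utility: work that takes a while and needs no immediate feedback.
DispatchQueue.global(qos: .utility).async(group: group) {
    result = checksum(of: [1, 2, 3, 4])
}

group.wait() // for demonstration only; never block the main thread like this in an app
print(result) // 10
```

In a real app you would typically hand the result back with `DispatchQueue.main.async` instead of waiting, since UI work belongs on the main queue.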

Task Queuing

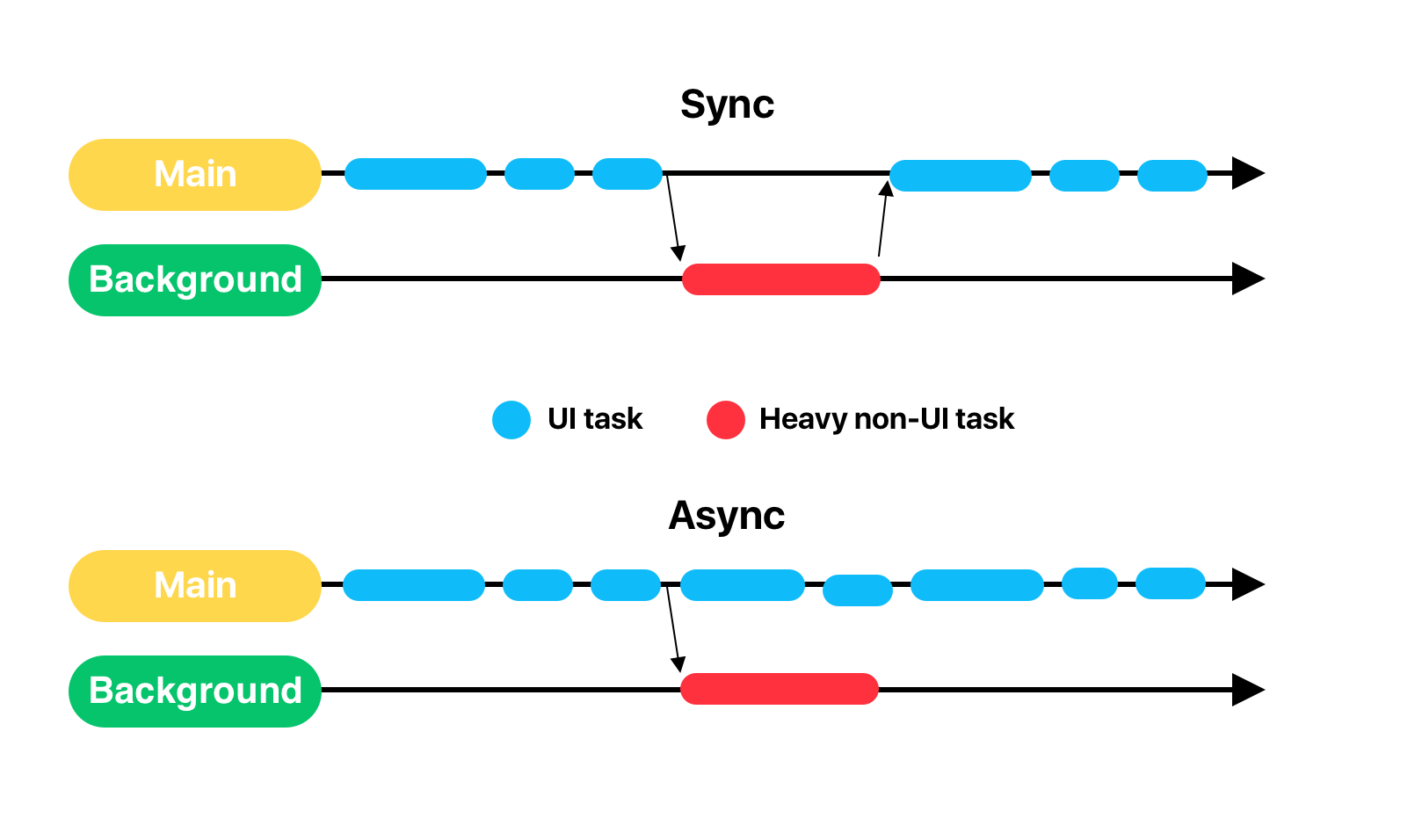

Tasks in any queue, whether concurrent or serial, can be enqueued synchronously or asynchronously. When a task is enqueued asynchronously, the code following the task enqueue continues to execute.

...

    DispatchQueue.global().async {
        processImage()
    }
    doRequest() // executes immediately, without waiting for processImage() to finish

In the case of synchronous enqueueing, the code following the call does not continue until the queued task has completed.

...

    DispatchQueue.global().sync {
        processImage()
    }
    doRequest() // runs only after processImage() has finished

DispatchWorkItem

DispatchWorkItem is a specialized GCD class that provides a more object-oriented alternative to using closures (blocks) for queuing tasks. Unlike the regular task enqueue, DispatchWorkItem offers the ability to:

- Specify the task’s priority.

- Receive notification of task completion.

- Cancel the task.

☝️

It’s important to understand that task cancellation works until the moment the task starts, meaning while it is still in the queue. If GCD has already started executing the code inside the DispatchWorkItem block, cancelling the task will have no effect, and the code will continue to run.
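The three capabilities can be sketched together. The queue label and the logged strings are hypothetical.

```swift
import Dispatch

let queue = DispatchQueue(label: "com.example.work") // hypothetical label
var log: [String] = []

// A work item with an explicit priority (QoS).
let save = DispatchWorkItem(qos: .utility) { log.append("saved") }
let upload = DispatchWorkItem { log.append("uploaded") }

upload.cancel()              // cancelled before it starts, so its block never runs
queue.async(execute: save)
queue.async(execute: upload)

save.wait()                  // block until `save` has finished
queue.sync { }               // drain the serial queue
print(log, upload.isCancelled) // ["saved"] true
```

Besides `wait()`, a work item also offers `notify(queue:execute:)` for a non-blocking completion callback. A long-running block that should stop early has to check `isCancelled` itself and return.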

NSOperation

GCD provides a convenient abstraction for writing asynchronous code, both conceptually and syntactically. However, this wasn’t always the case. In Objective-C (and in the early versions of Swift), working with queues and tasks was not as convenient as in modern Swift, and the C-style API sat awkwardly with the “Objective” in the language’s name. Apple’s object-oriented alternative is NSOperation (Operation in Swift), an API that predates GCD on Apple platforms and has used it under the hood since GCD arrived.

The Operation API closely mirrors GCD, with OperationQueue taking the place of DispatchQueue and Operation taking the place of DispatchWorkItem. However, in addition to the object-oriented syntax, operations offer two notable advantages:

- The ability to specify the maximum number of concurrently executing tasks in a queue.

- The ability to specify dependent operations, thus creating a hierarchy of operations. In this case, an operation will only start when all the operations it depends on have completed.

The latter point is very convenient for building a chain of requests when one request requires information from several others to execute.
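Both advantages can be sketched with `BlockOperation`. The values 2 and 3 are hypothetical stand-ins for the responses of two requests.

```swift
import Foundation

let queue = OperationQueue()
queue.maxConcurrentOperationCount = 2 // at most two operations run at once

var a = 0, b = 0, sum = 0

// Two independent "requests" (hypothetical values stand in for responses).
let fetchA = BlockOperation { a = 2 }
let fetchB = BlockOperation { b = 3 }

// The combining step must wait for both results.
let combine = BlockOperation { sum = a + b }
combine.addDependency(fetchA)
combine.addDependency(fetchB)

// The order in the array does not matter; dependencies decide what runs first.
queue.addOperations([combine, fetchA, fetchB], waitUntilFinished: true)
print(sum) // 5
```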

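    class CustomOperation: Operation {
        var outputValue: Int?

        // Takes its input from the first dependency that is also a CustomOperation.
        var inputValue: Int {
            return dependencies
                .compactMap { $0 as? CustomOperation }
                .first?
                .outputValue ?? 0
        }

        // ...
    }

    let operation1 = CustomOperation()
    let operation2 = CustomOperation()
    operation2.addDependency(operation1)

    // Let a queue start the operations; operation2 runs only after operation1 finishes.
    let queue = OperationQueue()
    queue.addOperations([operation1, operation2], waitUntilFinished: false)

Errors and Issues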

When discussing asynchronous and multithreaded programming, it’s crucial to address some of the fundamental mistakes that developers can make when writing code that runs in parallel.

Race Condition

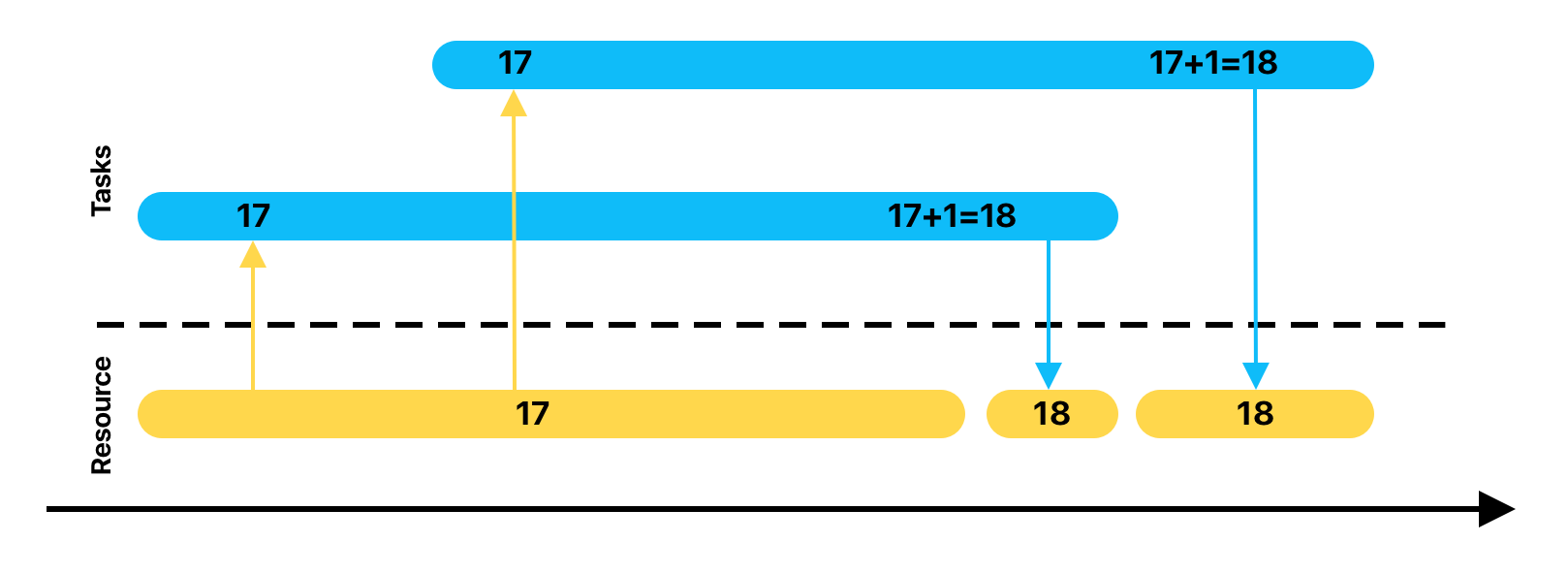

A race condition is a design error in multithreaded systems where access to a shared resource is not synchronized, so the outcome depends on the order in which the code happens to execute. This is hard to grasp in the abstract, so let’s look at a specific case.

The illustration above shows two threads incrementing the same variable. To increment the value of a variable, the processor needs to perform three actions:

- Read the value of the variable from shared memory into a register.

- Increase the value in the register by 1.

- Write the value from the register back to shared memory.

As you can see in the illustration, the second thread reads the value of the variable before the first thread has had a chance to write the incremented value. As a result, one increment of the counter is lost, which can lead to both harmless bugs and serious issues, potentially resulting in a crash. This situation is a classic example of a race condition.
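One common remedy is to make the read-modify-write sequence exclusive. The sketch below does that with `NSLock`; the `Counter` class is hypothetical, and a serial queue would be an equally valid choice.

```swift
import Foundation

// A counter whose read-modify-write sequence is guarded by a lock.
final class Counter: @unchecked Sendable {
    private let lock = NSLock()
    private var value = 0

    func increment() {
        lock.lock()
        defer { lock.unlock() }
        value += 1 // read, add, write: no other thread can interleave here
    }

    var current: Int {
        lock.lock()
        defer { lock.unlock() }
        return value
    }
}

let counter = Counter()

// Runs the closure 1,000 times, spreading the iterations across available cores,
// and returns only after all iterations have completed.
DispatchQueue.concurrentPerform(iterations: 1_000) { _ in
    counter.increment()
}

print(counter.current) // 1000
```

Without the lock, some of the 1,000 increments could be lost in exactly the way the illustration shows.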

💡

One of the most serious known consequences of a race condition is the case of the Therac-25, a medical device used for radiation therapy. In this instance, a race condition led to incorrect values in a variable used to determine the operating mode of the radiation mechanism. This resulted in dangerous radiation overdoses to patients and underscores the critical importance of addressing race conditions in software, especially in safety-critical systems.

Deadlock

Deadlock, or mutual blocking, is a multithreaded software error in which several threads wait indefinitely for resources held by one another.

Let’s break it down with an example: Suppose task A in thread A locks access to a resource A, let’s say it’s a SettingsStorage. Task A locks access to the storage, reads some values from there, and performs some calculations. Meanwhile, task B starts and locks access to resource B, which could be a database. To perform some calculations, task B also needs access to SettingsStorage, and it starts waiting for task A to release it. At the same time, task A needs access to the database, but it’s already locked by task B. This results in a mutual deadlock: task A is waiting for the database, which is locked by task B, which is waiting for the storage, locked by task A.
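A standard way to avoid this situation is to have every task acquire the locks in the same order. The sketch below assumes two hypothetical locks, one per resource; it illustrates the idea and is not a complete storage design.

```swift
import Foundation

// Hypothetical locks guarding the two resources from the example.
let settingsLock = NSLock()
let databaseLock = NSLock()
var completed = 0 // modified only while both locks are held

// Every task takes the locks in the same order: settings first, then database.
// No task can hold the database lock while waiting for the settings lock.
func runTask() {
    settingsLock.lock()
    databaseLock.lock()
    completed += 1 // work that needs both resources
    databaseLock.unlock()
    settingsLock.unlock()
}

let group = DispatchGroup()
for _ in 0..<2 {
    DispatchQueue.global().async(group: group) { runTask() }
}
group.wait()
print(completed) // 2
```

If one task took the locks in the opposite order, the two tasks could each grab one lock and wait forever for the other, which is the scenario described above.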

Priority inversion

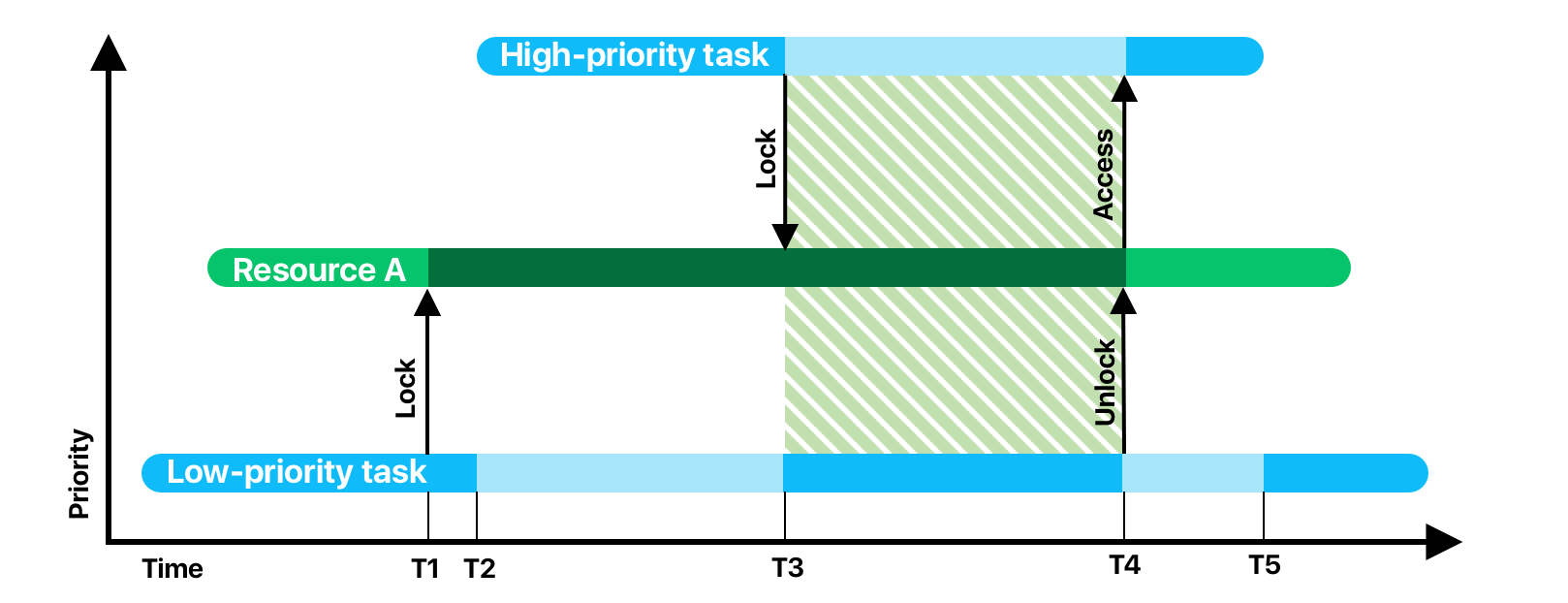

Priority inversion is a scheduling problem in which a higher-priority task ends up waiting for a lower-priority one, so their effective priorities are reversed without the developer intending it.

Let’s say we have only two tasks with different priorities and only one resource, which, once again, is the database. The low-priority task is placed in the queue first. It starts its work and at time T1, it needs the database and locks access to it. Almost immediately after that, the high-priority task starts and preempts the low-priority one. Everything goes as planned until time T3, where the high-priority task tries to acquire the database. Since the resource is locked, the high-priority task is put into a waiting state, while the low-priority task gets CPU time. The time interval between T3 and T4 is called priority inversion. During this interval, there is a logical inconsistency with scheduling rules – the higher-priority task is waiting while the lower-priority task is executing.